This topic has generated quite a bit of discussion on one of the proxy mailing lists, and it occurred to me that it may deserve wider distribution, since not all of the potential impacts are exclusive to proxy services.

What is "iCloud Private Relay"?

It is a new privacy feature in iOS 15, iPadOS 15, and macOS Monterey that prevents the IP addresses seen by websites from being mapped directly back to end users.

Conceptually, Private Relay shares many attributes with a VPN service. One major difference from traditional academic rewriting proxy services is that incoming and outgoing traffic are explicitly separated: the traffic arriving from the Apple device and the traffic leaving the service for websites are handled by separate parties, configured so that the network equipment on each side cannot see the other side:

> When Private Relay is enabled, your requests are sent through two separate, secure internet relays. Your IP address is visible to your network provider and to the first relay, which is operated by Apple. Your DNS records are encrypted, so neither party can see the address of the website you’re trying to visit. The second relay, which is operated by a third-party content provider, generates a temporary IP address, decrypts the name of the website you requested and connects you to the site. (source)

What is required to enable the "Private Relay" feature?

Private Relay currently requires a paid iCloud+ subscription, and enablement is managed by individual users.

Why should I care about Private Relay?

If users enable this service, their devices will use IP addresses that are mapped only to broad geographic regions (Apple publishes the geolocation mapping for these IP addresses).

This means that users on campus will no longer present campus IP addresses when accessing vendor services. If a vendor authenticates your campus by IP address, those devices will not be recognized as being on campus.
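To illustrate why IP authentication stops matching, here is a minimal Python sketch of the kind of range check a vendor might perform. The campus ranges and client addresses are hypothetical documentation addresses, not real campus or Private Relay ranges, and `is_campus_ip` is an illustrative name rather than any vendor's API.

```python
import ipaddress

# Hypothetical campus ranges a vendor might have on file (documentation prefixes).
CAMPUS_NETWORKS = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8:1234::/48"),
]


def is_campus_ip(client_ip):
    """Return True if client_ip falls inside any registered campus range."""
    address = ipaddress.ip_address(client_ip)
    # Testing an IPv4 address against an IPv6 network (or vice versa) returns False.
    return any(address in network for network in CAMPUS_NETWORKS)


if __name__ == "__main__":
    # A device on the campus network without Private Relay.
    print(is_campus_ip("198.51.100.42"))  # True
    # The same device seen through a relay egress address (hypothetical).
    print(is_campus_ip("203.0.113.7"))  # False
```

The vendor only ever sees the relay's egress address, so the device fails the check even though it is physically on campus.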

Depending on how the campus network is configured, Private Relay IP addresses could also be used to access on-campus services, making the user appear to be off-campus. This is probably unusual these days, but it is possible.

Will this impact authentication services?

Probably not, unless your authentication service enforces IP-based usage restrictions. The other possibility is that your IdP implements location-based or distance-based threat calculations to detect potential bad actors.

One example I have seen blocks users who would have had to travel faster than 600 miles per hour to get from location A to location B. The way Apple has set up this service makes it unlikely that such rules would be triggered, but until we see it in action, I would not feel comfortable saying that it could never happen.
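A minimal sketch of an "impossible travel" rule like the one described, assuming the IdP has already geolocated each login to a latitude/longitude pair. The 600 mph threshold comes from the example above; the function names and coordinates are illustrative assumptions, not any IdP's actual implementation.

```python
import math

EARTH_RADIUS_MILES = 3958.8
MAX_PLAUSIBLE_MPH = 600  # threshold from the rule described above


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points, in miles."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def is_impossible_travel(loc_a, loc_b, elapsed_hours):
    """Flag a pair of logins whose implied travel speed exceeds the threshold."""
    distance = haversine_miles(*loc_a, *loc_b)
    if elapsed_hours <= 0:
        # No time has passed: only suspicious if the locations differ.
        return distance > 0
    return distance / elapsed_hours > MAX_PLAUSIBLE_MPH


if __name__ == "__main__":
    new_york = (40.7128, -74.0060)
    los_angeles = (34.0522, -118.2437)
    print(is_impossible_travel(new_york, los_angeles, 1))  # True (about 2,450 mph)
    print(is_impossible_travel(new_york, los_angeles, 5))  # False (about 490 mph)
```

The rule is only as good as its geolocation input: if a relay address maps to a broad region rather than the user's real location, the computed distance between logins changes accordingly.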

Will this impact proxy services?

Again, probably not. Most proxy servers use cookies to keep track of user sessions, and those cookies should not be tied to the IP address of the authenticated user.

This service is not set up to randomize IP addresses, but rather to obscure them. As currently documented, users will be mapped to a country, state/province, or major city as their location, and there is no mention of bouncing between geographic regions unless the user is actually moving. This is not much different from how a user on a phone or hotspot might appear while traveling down a highway today.

Unless an intermediate system involved in authentication takes IP addresses into account, the current thinking is that this will not affect proxy users any more than mobile networks or VPNs do today. I have seen some token- or HMAC-based authentication services that can include IP addresses in the authentication, but again, this is not an IP randomization service, so the end user's IP address may actually change less often than it does today.
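To show why IP-bound tokens are the case to watch, here is a hypothetical HMAC scheme (not the format of any specific product) in which the signature covers the client IP address. The shared key, the `user|expires|digest` layout, and the function names are all assumptions for illustration.

```python
import hashlib
import hmac

SECRET_KEY = b"example-shared-secret"  # hypothetical key shared by issuer and verifier


def _signature(user_id, client_ip, expires):
    """HMAC-SHA256 over the user, the client IP, and the expiry time."""
    message = f"{user_id}|{client_ip}|{expires}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def sign_token(user_id, client_ip, expires):
    """Issue a token bound to the IP address the issuer saw."""
    return f"{user_id}|{expires}|{_signature(user_id, client_ip, expires)}"


def verify_token(token, client_ip, now):
    """Accept the token only if it is unexpired and presented from the same IP."""
    try:
        user_id, expires, digest = token.rsplit("|", 2)
        expires_at = int(expires)
    except ValueError:
        return False  # malformed token
    if now > expires_at:
        return False
    expected = _signature(user_id, client_ip, expires_at)
    # Constant-time comparison to avoid leaking signature bytes via timing.
    return hmac.compare_digest(expected, digest)


if __name__ == "__main__":
    token = sign_token("alice", "198.51.100.42", 1000)
    print(verify_token(token, "198.51.100.42", 900))  # True
    print(verify_token(token, "203.0.113.7", 900))  # False: IP changed
```

In a scheme like this, a legitimate user fails verification whenever the IP seen at issuance differs from the IP seen at verification, which is why the stability of the user's address matters more than whether it is a campus address.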

Another factor is that the service is designed to use the newer UDP-based QUIC protocol. I am not aware of any rewriting proxy software today that supports QUIC, and if the destination site does not use QUIC, then the connections may not be permitted to use Private Relay at all:

> iCloud Private Relay uses QUIC, a new standard transport protocol based on UDP. QUIC connections in Private Relay are set up using port 443 and TLS 1.3, so make sure your network and server are ready to handle these connections. (source)
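Sites usually advertise HTTP/3 (QUIC) support through the `Alt-Svc` response header, for example `h3=":443"; ma=86400`. A minimal Python sketch for checking a header value you have already captured follows; it uses naive comma splitting, which is an assumption that holds for typical values but is not a complete RFC 7838 parser, and the function names are illustrative.

```python
def advertised_protocols(alt_svc):
    """Return the set of protocol IDs listed in an Alt-Svc header value."""
    protocols = set()
    if not alt_svc or alt_svc.strip().lower() == "clear":
        # "clear" tells the client to forget previously advertised alternatives.
        return protocols
    # Naive split: assumes no commas inside quoted alt-authority values.
    for entry in alt_svc.split(","):
        alternative = entry.split(";", 1)[0].strip()
        if "=" in alternative:
            protocols.add(alternative.split("=", 1)[0].strip().lower())
    return protocols


def advertises_http3(alt_svc):
    """True if the header offers HTTP/3 ("h3") or a draft version ("h3-NN")."""
    return any(p == "h3" or p.startswith("h3-") for p in advertised_protocols(alt_svc))


if __name__ == "__main__":
    print(advertises_http3('h3=":443"; ma=86400, h3-29=":443"; ma=86400'))  # True
    print(advertises_http3('h2=":443"'))  # False
```

To capture the header, something like `urllib.request.urlopen(url).headers.get("Alt-Svc")` works, although `urllib` itself only speaks HTTP/1.1 and will not use QUIC.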

Will this impact usage metrics?

If you rely on IP location mapping to approximate usage by campus location, for example, you can wave goodbye to that if your campuses are close to each other.

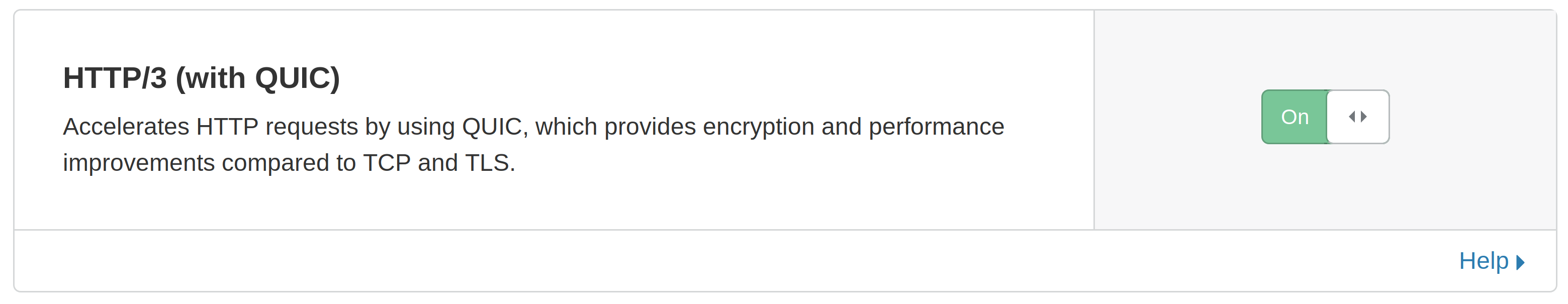

Many vendors use Cloudflare to front-end their platforms today, and if HTTP/3 (with QUIC) is enabled on a vendor's Cloudflare zone, that zone will be a candidate for Private Relay.

You will instead get report data down to the "region", which could be a country, state/province, or closest major metropolitan area depending on local population density.

If you have multiple campuses (or other groups or entities that you wish to measure by geography) that fall within the same "region", the metrics for those users will all be lumped together, and you will need a different method for categorizing them.
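If you want to at least identify Private Relay traffic in your logs, Apple's published geolocation data for its egress addresses can be loaded and matched against client IPs. The sketch below assumes each CSV row begins with an IP prefix followed by country, region, and city columns; check that layout against the file you actually download. The sample rows use documentation prefixes and are hypothetical.

```python
import csv
import io
import ipaddress

# Hypothetical rows in the assumed "prefix,country,region,city" layout.
SAMPLE_CSV = """192.0.2.0/28,US,US-CA,Los Angeles,
2001:db8:ff00::/40,US,US-NY,New York,
"""


def load_egress_ranges(csv_text):
    """Parse egress rows into (network, region label) pairs."""
    ranges = []
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue  # skip blank lines
        network = ipaddress.ip_network(row[0].strip())
        # Join the non-empty country/region/city columns into one label.
        label = "/".join(col for col in row[1:4] if col)
        ranges.append((network, label))
    return ranges


def classify(client_ip, ranges):
    """Return the relay region label for client_ip, or None if it is not a relay address."""
    address = ipaddress.ip_address(client_ip)
    for network, label in ranges:
        if address in network:
            return label
    return None


if __name__ == "__main__":
    ranges = load_egress_ranges(SAMPLE_CSV)
    print(classify("192.0.2.5", ranges))  # US/US-CA/Los Angeles
    print(classify("198.51.100.1", ranges))  # None
```

The linear scan keeps the sketch short; for the full data set, a sorted or prefix-tree lookup would be much faster. Either way, the best this gives you is the region label, not the campus.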

What if we need to use campus IP authentication for services?

Apple provides a way for local IT to block the use of Private Relay on the local network:

> Some enterprise or school networks might be required to audit all network traffic by policy, and your network can block access to Private Relay in these cases. The user will be alerted that they need to either disable Private Relay for your network or choose another network.
>
> The fastest and most reliable way to alert users is to return a negative answer from your network’s DNS resolver, preventing DNS resolution for the following hostnames used by Private Relay traffic. Avoid causing DNS resolution timeouts or silently dropping IP packets sent to the Private Relay server, as this can lead to delays on client devices.
>
> - mask.icloud.com
> - mask-h2.icloud.com
>
> (source)

This can be accomplished by using DNS filtering, Response Policy Zones (RPZ), or similar DNS server techniques.
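As one example of the RPZ approach, the sketch below generates a minimal policy zone that answers NXDOMAIN (a negative answer, rather than a timeout) for the two Private Relay hostnames quoted above, using the standard RPZ `CNAME .` action. The SOA/NS values, TTL, and function name are placeholder assumptions; how you load the zone (for example through BIND's `response-policy` option) depends on your resolver.

```python
# Hostnames from Apple's guidance quoted above.
PRIVATE_RELAY_HOSTNAMES = ["mask.icloud.com", "mask-h2.icloud.com"]


def build_rpz_zone(hostnames, serial=1, ttl=300):
    """Return RPZ zone-file text that makes a resolver answer NXDOMAIN for each hostname."""
    lines = [
        f"$TTL {ttl}",
        # Placeholder SOA and NS records; the zone needs them to be a valid zone file.
        f"@ IN SOA localhost. hostmaster.localhost. ( {serial} 3600 600 86400 {ttl} )",
        "@ IN NS localhost.",
    ]
    for name in hostnames:
        # Owner names are relative to the policy zone; "CNAME ." is the RPZ NXDOMAIN action.
        lines.append(f"{name} IN CNAME .")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_rpz_zone(PRIVATE_RELAY_HOSTNAMES), end="")
```

Returning NXDOMAIN follows Apple's advice to give a fast negative answer instead of dropping packets, so devices can alert the user promptly.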